Syphon lets you send the output of a Processing sketch directly into MadMapper or an equivalent program. It is available only on Mac. Here's how to get it working. The full source code is linked at the bottom.

1) Download Syphon for Processing through this link: SyphonProcessing-1.0-RC1.zip

2) Extract the folder from the zip, and look inside. You should see an INSTALL.txt and a folder simply called Syphon.

3) Drag the folder called Syphon into the libraries folder inside your Processing sketchbook folder, which is typically located at /Users/your_name/Documents/Processing/libraries

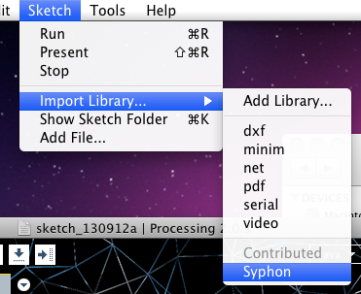

4) Restart Processing if it was running, then go to Sketch > Import Library > Syphon. If it isn't listed, one of the previous steps went wrong :(. Selecting it should add an import statement at the top of your source code.

5) Add the following code, both above and inside your setup() function, to prepare to send images:

```processing
import codeanticode.syphon.*;

PGraphics canvas;
SyphonServer server;

void setup() {
  size(400, 400, P3D);
  canvas = createGraphics(400, 400, P3D);

  // Create Syphon server to send frames out.
  server = new SyphonServer(this, "Processing Syphon");
}
```

A few things to note. After importing the Syphon library in step 4, we create a variable "canvas" of type PGraphics. We have to do our drawing through this PGraphics instead of drawing to the sketch window directly, but in the end that doesn't change a whole lot. More on that later. We also create a variable "server" of type SyphonServer, which is what ultimately serves the images to MadMapper. In setup() we set the size of the window with size(), adding a third argument, P3D, which Syphon needs to run correctly. We create the canvas the same way, with a matching resolution, and construct the server there as well.

6) Now move on to the draw() function. Before drawing anything, we must let Processing know we want to draw to the canvas rather than to the sketch window. To do this, we call canvas.beginDraw(). The normal drawing functions work from here, but they must be prefixed with "canvas." (for example canvas.line, canvas.ellipse). Follow them with canvas.endDraw(); to finish writing to the canvas. Example code below:

```processing
void draw() {
  canvas.beginDraw();
  canvas.background(100);
  canvas.stroke(255);
  canvas.line(50, 50, mouseX, mouseY);
  canvas.endDraw();
}
```

7) You'll notice at this point that pressing Run doesn't show anything you've drawn. That's because we're drawing to the canvas rather than to the sketch window itself. To fix this, add the following line after canvas.endDraw():

```processing
image(canvas, 0, 0);
```

The sketch will now show the drawing that is being done to the canvas.

8) Finally, we need to tell the server to send the canvas to MadMapper. Add the following to the end of your draw() function and you'll be ready to move on to projecting.

```processing
server.sendImage(canvas);
```

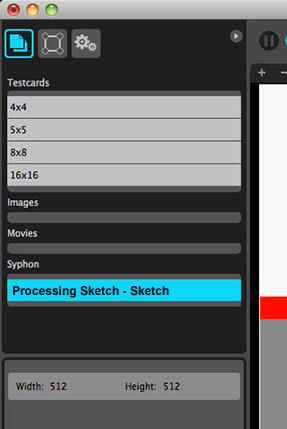

9) Now open MadMapper, and you should be able to load footage directly from your sketch, updated in real time.
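Putting steps 5 through 8 together, a minimal complete sketch might look like the one below. It is a sketch assuming the Syphon for Processing library from step 1 is installed, and it uses only the calls shown in the steps above; "Processing Syphon" is just the server name that Syphon clients such as MadMapper should see.

```processing
import codeanticode.syphon.*;

PGraphics canvas;     // off-screen buffer whose contents are sent over Syphon
SyphonServer server;  // publishes frames to Syphon clients such as MadMapper

void setup() {
  size(400, 400, P3D);                     // Syphon needs the OpenGL-based P3D renderer
  canvas = createGraphics(400, 400, P3D);  // same resolution as the window
  server = new SyphonServer(this, "Processing Syphon");
}

void draw() {
  // All drawing goes through the canvas, between beginDraw() and endDraw()
  canvas.beginDraw();
  canvas.background(100);
  canvas.stroke(255);
  canvas.line(50, 50, mouseX, mouseY);
  canvas.endDraw();

  image(canvas, 0, 0);       // local preview in the sketch window (step 7)
  server.sendImage(canvas);  // send the finished frame out over Syphon (step 8)
}
```

The order matters: the frame is only complete after canvas.endDraw(), so both the preview and sendImage() come after it, once per call to draw().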

I hope that was all clear; feel free to comment below if you have any questions or comments.

Full Processing source with tons of comments: http://pastebin.com/5jLHPca8

You are amazing... thank you so much!

You’re welcome, glad I was able to help!

Great tutorial. Made it really simple. Thank you kindly 🙂

Happy to help!

Great tutorial! Thanks for focusing in on the bare bones.

I'm having a problem with my imported library. When I run this:

```processing
import codeanticode.syphon.*;

PGraphics canvas;
SyphonClient client;

public void setup() {
  size(480, 340, P3D);
  println("Available Syphon servers:");
  println(SyphonClient.listServers());
  background(0);
}
```

I get an error that tells me “no JSyphon in java.library.path”

I successfully installed / imported the library so I’m not sure what I’m doing wrong, any ideas?

Well to be honest, I haven’t had to mess around with the SyphonClient class at all, so I can’t help you with anything specific in terms of its usage. However, I can tell you two generic things that might help.

For starters, it looks like you never instantiate client. You’ll need to add something along the lines of client = new SyphonClient(this), similar to what you see in the tutorial but for client instead of server.

Secondly, you probably meant to type println(client.listServers()); as opposed to what you currently have. Currently you're trying to call a function on the class rather than on an instance of that class. See if doing those two things gets you anywhere.

Thanks for the quick reply. I managed to get your code working by copying and pasting it exactly; however, the receiveFrames example that comes with the library gives the same error I'm getting while running this:

```processing
import codeanticode.syphon.*;

PGraphics canvas;
SyphonClient client;

void setup() {
  size(400, 400, P3D);
  println("Available Syphon servers:");
  println(SyphonClient.listServers());
}
```

I'm stumped. I have Processing version 2.1.2. Do you think that could have anything to do with it?

Also, not sure if this is relevant, but I'm trying to get a bare-bones sketch working on a PC, not a Mac.

Sorry, I missed your follow-up replies. Yes, that is extremely relevant, as unfortunately Syphon only works on Mac. Sorry about that. I put that in the tutorial, but perhaps I'll bold it now, ha.

Excuse me, may I ask you some questions?

I'm using the Processing video example Mirror with Syphon.

I connected Arena and Processing, but the mirror effect didn't appear in Arena.

Instead, the normal picture appeared, and it was upside down in Arena.

Can you help me solve it? Thank you so much!

Here is my code :

```processing
/**
 * Mirror
 * by Daniel Shiffman.
 *
 * Each pixel from the video source is drawn as a rectangle with rotation based on brightness.
 */

import codeanticode.syphon.*;
import processing.video.*;

SyphonServer server;

// Size of each cell in the grid
int cellSize = 20;
// Number of columns and rows in our system
int cols, rows;
// Variable for capture device
Capture video;

void setup() {
  size(640, 480, P3D);
  frameRate(30);
  cols = width / cellSize;
  rows = height / cellSize;
  colorMode(RGB, 255, 255, 255, 100);

  // This is the default video input; see the GettingStartedCapture
  // example if it creates an error
  video = new Capture(this, width, height);

  // Start capturing the images from the camera
  video.start();

  //background(0);
  server = new SyphonServer(this, "Processing Syphon");
}

void draw() {
  if (video.available()) {
    video.read();
    video.loadPixels();

    // Begin loop for columns
    for (int i = 0; i < cols; i++) {
      // Begin loop for rows
      for (int j = 0; j < rows; j++) {
        // Where are we, pixel-wise?
        int x = i*cellSize;
        int y = j*cellSize;
        int loc = (video.width - x - 1) + y*video.width; // Reversing x to mirror the image

        float r = red(video.pixels[loc]);
        float g = green(video.pixels[loc]);
        float b = blue(video.pixels[loc]);
        // Make a new color with an alpha component
        color c = color(r, g, b, 75);

        // Code for drawing a single rect
        // Using translate in order for rotation to work properly
        pushMatrix();
        translate(x+cellSize/2, y+cellSize/2);
        // Rotation formula based on brightness
        rotate((2 * PI * brightness(c) / 255.0));
        rectMode(CENTER);
        fill(c);
        noStroke();
        // Rects are larger than the cell for some overlap
        rect(0, 0, cellSize+6, cellSize+6);
        popMatrix();

        server.sendImage(video);
      }
    }
  }
}
```

I don’t see any obvious issues, but I have no way to test this code and I am unfamiliar with this Arena program. Sorry I can’t be more help.
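One thing that may explain the missing effect: server.sendImage(video) sends the raw camera frame stored in video, not the rotated rectangles drawn to the window, so the mirror effect never reaches the Syphon output. It is also called once per cell instead of once per frame. Below is an untested rework that draws as before and then sends the window's own graphics context, g, once after both loops. It assumes sendImage() accepts g, as suggested in a later comment on this post. The per-channel locals are folded into a single color() call so that no local variable named g shadows the sketch's g. This does not address the upside-down orientation in Arena.

```processing
import codeanticode.syphon.*;
import processing.video.*;

SyphonServer server;
Capture video;
int cellSize = 20;  // size of each cell in the grid
int cols, rows;     // number of columns and rows

void setup() {
  size(640, 480, P3D);
  frameRate(30);
  cols = width / cellSize;
  rows = height / cellSize;
  colorMode(RGB, 255, 255, 255, 100);
  video = new Capture(this, width, height);
  video.start();
  server = new SyphonServer(this, "Processing Syphon");
}

void draw() {
  if (video.available()) {
    video.read();
    video.loadPixels();
    for (int i = 0; i < cols; i++) {
      for (int j = 0; j < rows; j++) {
        int x = i * cellSize;
        int y = j * cellSize;
        int loc = (video.width - x - 1) + y * video.width;  // mirrored x
        color px = video.pixels[loc];
        color c = color(red(px), green(px), blue(px), 75);  // add alpha
        pushMatrix();
        translate(x + cellSize/2, y + cellSize/2);
        rotate(2 * PI * brightness(c) / 255.0);  // rotation based on brightness
        rectMode(CENTER);
        fill(c);
        noStroke();
        rect(0, 0, cellSize + 6, cellSize + 6);
        popMatrix();
      }
    }
  }
  // Send what was drawn to the window (the mirrored rects),
  // once per frame, after all drawing is finished
  server.sendImage(g);
}
```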

Thanks for the great tut.

But after I changed the code, my frame rate dropped to about 5 or 6 fps....

I'm running the Kinect point cloud code, and the original code was running fine... any idea?

```processing
// Daniel Shiffman
// Kinect Point Cloud example
// http://www.shiffman.net
// https://github.com/shiffman/libfreenect/tree/master/wrappers/java/processing

import codeanticode.syphon.*;

PGraphics canvas;
SyphonServer server;

import org.openkinect.*;
import org.openkinect.processing.*;

// Kinect Library object
Kinect kinect;

float a = 0;

// Size of kinect image
int w = 640;
int h = 480;

// We'll use a lookup table so that we don't have to repeat the math over and over
float[] depthLookUp = new float[2048];

void setup() {
  size(400, 400, P3D);
  canvas = createGraphics(400, 400, P3D);

  // Create Syphon server to send frames out.
  server = new SyphonServer(this, "Processing Syphon");

  kinect = new Kinect(this);
  kinect.start();
  kinect.enableDepth(true);
  // We don't need the grayscale image in this example
  // so this makes it more efficient
  kinect.processDepthImage(false);

  // Lookup table for all possible depth values (0 - 2047)
  for (int i = 0; i < depthLookUp.length; i++) {
    depthLookUp[i] = rawDepthToMeters(i);
  }
}

void draw() {
  canvas.beginDraw();
  canvas.background(0);
  //canvas.stroke(255);
  //canvas.line(50, 50, mouseX, mouseY);
  canvas.fill(255);
  // canvas.textMode(SCREEN);
  //canvas.text("Kinect FR: " + (int)kinect.getDepthFPS() + "\nProcessing FR: " + (int)frameRate, 10, 16);

  // Get the raw depth as array of integers
  int[] depth = kinect.getRawDepth();

  // We're just going to calculate and draw every 4th pixel (equivalent of 160×120)
  int skip = 4;

  // Translate and rotate
  canvas.translate(width/2, height/2, -50);
  canvas.rotateY(a);

  for (int x=0; x<w; x+=skip) {
    for (int y=0; y<h; y+=skip) {
      int offset = x+y*w;

      // Convert kinect data to world xyz coordinate
      int rawDepth = depth[offset];
      PVector v = depthToWorld(x, y, rawDepth);

      canvas.stroke(255);
      canvas.pushMatrix();
      // Scale up by 200
      float factor = 200;
      canvas.translate(v.x*factor, v.y*factor, factor-v.z*factor);
      // Draw a point
      canvas.point(0, 0);
      canvas.popMatrix();

      canvas.endDraw();
      image(canvas, 0, 0);
      server.sendImage(canvas);
    }
  }

  // Rotate
  a += 0.015f;
}

// These functions come from: http://graphics.stanford.edu/~mdfisher/Kinect.html
float rawDepthToMeters(int depthValue) {
  if (depthValue < 2047) {
    return (float)(1.0 / ((double)(depthValue) * -0.0030711016 + 3.3309495161));
  }
  return 0.0f;
}

PVector depthToWorld(int x, int y, int depthValue) {
  final double fx_d = 1.0 / 5.9421434211923247e+02;
  final double fy_d = 1.0 / 5.9104053696870778e+02;
  final double cx_d = 3.3930780975300314e+02;
  final double cy_d = 2.4273913761751615e+02;

  PVector result = new PVector();
  double depth = depthLookUp[depthValue];//rawDepthToMeters(depthValue);
  result.x = (float)((x - cx_d) * depth * fx_d);
  result.y = (float)((y - cy_d) * depth * fy_d);
  result.z = (float)(depth);
  return result;
}

void stop() {
  kinect.quit();
  super.stop();
}
```
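A likely cause of the slowdown: in the draw() above, canvas.endDraw(), image(canvas, 0, 0) and server.sendImage(canvas) sit inside the inner for loop, so they run once for every sampled point (160 × 120 = 19,200 times per frame) instead of once per frame. Below is a sketch of draw() with those three calls moved after both loops. It is meant as a drop-in replacement for draw() in the sketch above and relies on that sketch's globals (kinect, canvas, server, w, h, a) and its depthToWorld() helper, so it is not standalone; it is also untested, since it needs a Kinect.

```processing
void draw() {
  canvas.beginDraw();
  canvas.background(0);
  canvas.stroke(255);   // same stroke for every point, so set it once

  int[] depth = kinect.getRawDepth();
  int skip = 4;         // sample every 4th pixel (160x120 points)
  float factor = 200;   // scale up by 200

  canvas.translate(width/2, height/2, -50);
  canvas.rotateY(a);

  for (int x = 0; x < w; x += skip) {
    for (int y = 0; y < h; y += skip) {
      int offset = x + y * w;
      PVector v = depthToWorld(x, y, depth[offset]);
      canvas.pushMatrix();
      canvas.translate(v.x * factor, v.y * factor, factor - v.z * factor);
      canvas.point(0, 0);
      canvas.popMatrix();
    }
  }

  // Finish the frame once, after every point has been drawn
  canvas.endDraw();
  image(canvas, 0, 0);        // local preview
  server.sendImage(canvas);   // one Syphon frame per draw()

  a += 0.015f;                // rotate
}
```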

Awesome tutorial, works perfectly! 🙂 Also helped me understand PGraphics… This will come in very handy.

Does it save on GPU usage if you don't call image() to draw to the Processing display at the end? (I guess it does?)… I turned it off anyway.

It would be useful if you didn't have to draw the Processing display at the same resolution as the outgoing Syphon res. If Syphon goes out at 1080p, it means there's this massive Processing window there too… any way around that? I tried using:

```processing
if (frame != null) {
  frame.setResizable(true);
}
```

But actually that seems to dynamically change the properties of the sketch – interesting… but not exactly what I’m after :)…

Would be cool to just have a little Processing sketch window there but be sending out in HD… any ideas?

I'm glad I could help! Yes, it should save some GPU processing, but I imagine the savings are pretty insignificant, since I assume it just draws the existing buffer to the Processing window. Regardless, it is only there to preview what is getting sent to MadMapper, and as you noticed, removing it didn't break anything.

Have you tried setting the canvas to 1080p and keeping the Processing window to something small? I figure if you already aren’t using the window to preview it should be fine to set it to a different size than the canvas. Unfortunately I have no way of testing this at the moment, so I could be wrong.

Try it out and let me know how it goes!
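Following that suggestion, a small preview window with a 1080p Syphon output might look like the sketch below. It assumes the library is happy to send a canvas larger than the sketch window (untested, as noted above). The five-argument form of image() scales the canvas down to fit the window for preview only, while sendImage() still sends the full-resolution canvas. The mouse mapping is just there so the example line still follows the cursor at the larger canvas size.

```processing
import codeanticode.syphon.*;

PGraphics canvas;
SyphonServer server;

void setup() {
  size(480, 270, P3D);                       // small 16:9 preview window
  canvas = createGraphics(1920, 1080, P3D);  // full-HD output buffer
  server = new SyphonServer(this, "Processing Syphon");
}

void draw() {
  canvas.beginDraw();
  canvas.background(100);
  canvas.stroke(255);
  // Map the mouse from window coordinates to canvas coordinates
  float mx = map(mouseX, 0, width, 0, canvas.width);
  float my = map(mouseY, 0, height, 0, canvas.height);
  canvas.line(50, 50, mx, my);
  canvas.endDraw();

  image(canvas, 0, 0, width, height);  // scaled-down preview
  server.sendImage(canvas);            // full 1920x1080 frame
}
```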

Hi, and thanks for your tutorial! I am having a problem: the Processing sketch doesn't show up in MadMapper. It says that it is creating a connection, but the next line says "stopping", followed by "releasing" on the line after that. Any ideas what I'm doing wrong? Or do you need some more info?

Thank you, thank you, thank you!

I managed to solve it. But another question came up: how do I use a for loop to draw into a PGraphics (p1) in Processing? If I just add the "for(......" between "beginDraw" and "endDraw", nothing appears. And I get error messages if I try putting p1 in front of it. Any suggestions?
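For the for-loop question: the for statement itself needs no prefix (which is why putting "p1." in front of it is an error); only the drawing calls inside the loop body take the p1. prefix, and the whole loop goes between p1.beginDraw() and p1.endDraw(). If nothing appears, one thing to check is that p1 is drawn to the window with image() after endDraw(). A minimal sketch, using p1 as in the question and a hypothetical row of circles as the content:

```processing
import codeanticode.syphon.*;

PGraphics p1;
SyphonServer server;

void setup() {
  size(400, 400, P3D);
  p1 = createGraphics(400, 400, P3D);
  server = new SyphonServer(this, "Processing Syphon");
}

void draw() {
  p1.beginDraw();
  p1.background(0);
  p1.noStroke();
  p1.fill(255);
  // The loop takes no prefix; only the drawing calls inside it do
  for (int i = 0; i < 10; i++) {
    p1.ellipse(20 + i * 40, height / 2, 30, 30);  // a row of 10 circles
  }
  p1.endDraw();

  image(p1, 0, 0);        // without this, nothing shows in the sketch window
  server.sendImage(p1);   // send the finished frame over Syphon
}
```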

Hey, one quick alternative to drawing everything to a separate PGraphics is to just use the one Processing already uses behind the scenes. If you poke through the source code, there's a variable 'g' that holds the current graphics context.

To output everything to the Syphon server, just call:

```processing
server.sendImage(g);
```
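With that approach the sketch draws normally, with no "canvas." prefixes, and sends the window's own graphics context at the end of draw(). A minimal sketch, assuming sendImage() accepts g as described in the comment above (g is the sketch's default PGraphics, so it is a valid argument wherever a PGraphics is expected):

```processing
import codeanticode.syphon.*;

SyphonServer server;

void setup() {
  size(400, 400, P3D);   // still uses the P3D renderer for Syphon
  server = new SyphonServer(this, "Processing Syphon");
}

void draw() {
  // Ordinary drawing calls go straight to the sketch window
  background(100);
  stroke(255);
  line(50, 50, mouseX, mouseY);

  // g is the sketch's own PGraphics; send it once all drawing is done
  server.sendImage(g);
}
```

The trade-off is that the Syphon output is always the same size as the sketch window, so this suits cases where the window resolution is also the output resolution.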